The Transformer Diagram Decoded: A Deep Dive into Neural Network Architecture (2025 Guide)

Meta Description: Master the Transformer model diagram step-by-step. A 20-year engineer’s breakdown of Encoder-Decoder, Multi-Head Attention, and Tensor shapes for AI architects.

1. Transformer Diagram Explained: A Systems Engineering Deep Dive

💡 Executive Summary

The Transformer is not just software; it is a sophisticated parallel processing control system. Unlike sequential RNNs, it acts as a massive parallel switchboard that lets all tokens interact simultaneously ($O(1)$ path length), serving as the foundational engine for modern Generative AI.

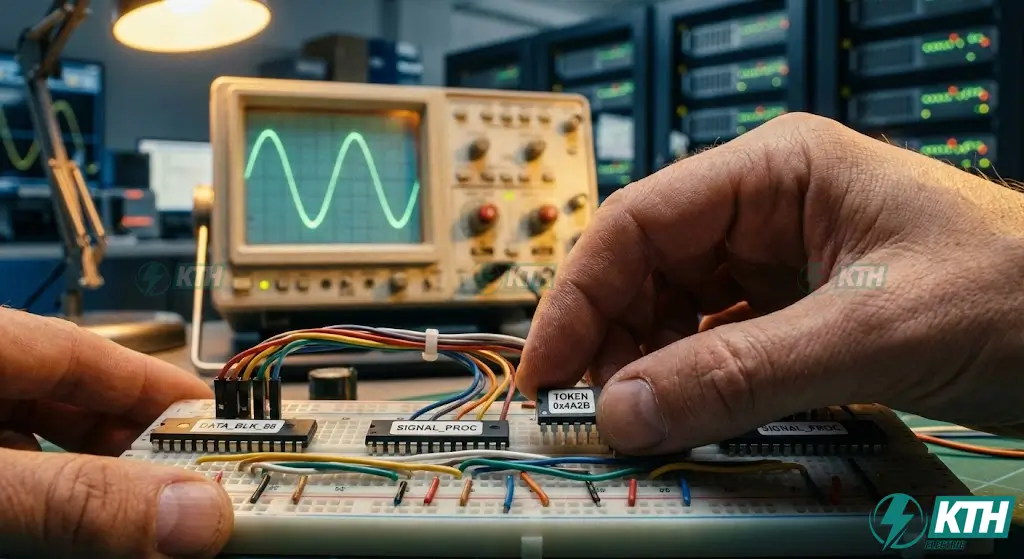

If you’ve spent decades analyzing circuit schematics and signal flow graphs like I have, the Transformer model introduced in the 2017 paper “Attention Is All You Need” feels less like a software algorithm and more like a sophisticated electrical control system. It is the engine block of the modern AI revolution—powering everything from ChatGPT to advanced machine vision.

But for many engineers and data scientists, the standard diagram remains a “black box.” You see arrows pointing everywhere, blocks labeled “Norm,” and mysterious $N \times$ stacks.

In this guide, we are going to deconstruct the Transformer diagram with the rigor of an electrical engineer. We won’t just look at the boxes; we will trace the “current” (data) as it flows from input voltage (tokens) to output, examining every transformer coil (attention head) and bypass wire (residual connection) along the way.

1.1. The Paradigm Shift: Why This Diagram Changed Everything

Key Takeaway: The shift from sequential bucket-brigade processing (RNN) to a parallel switchboard topology (Transformer) put every pair of tokens one step apart, which eased the vanishing-gradient problem over long distances and enabled dependencies spanning the entire context window.

Before 2017, we relied on Recurrent Neural Networks (RNNs) and LSTMs. Imagine an RNN as a bucket brigade: you pass a bucket of water (data) from person A to person B to person C. It’s sequential. If person A is slow, the whole line stops. Plus, by the time the bucket reaches person Z, half the water has spilled (the “vanishing gradient” problem).

The Transformer changed the topology entirely. Instead of a bucket brigade, it’s a massive parallel switchboard. It allows every word in a sentence to “talk” to every other word simultaneously, regardless of distance.

- The Old Way (RNN): Sequential processing ($t_1 \rightarrow t_2 \rightarrow t_3$). High latency, hard to parallelize, and the signal path between two tokens grows as $O(n)$ with their distance.

- The Transformer Way: Parallel processing. Dependencies can span the entire context window, with an $O(1)$ path length between any two signals.

1.2. The High-Level Block Diagram (The Black Box View)

System Overview: A Seq2Seq transducer comprising an Encoder Stack (Feature Extraction) and a Decoder Stack (Generative Reconstruction).

Let’s start with the satellite view before we open the chassis. At its highest level of abstraction, the Transformer is a Sequence-to-Sequence (Seq2Seq) transducer.

- Input Signal (Source): A sequence of tokens (e.g., “Hello, world”).

- The Transformer Stack: A complex network of Encoder and Decoder layers that process these signals.

- Output Signal (Target): A generated sequence of tokens (e.g., “Bonjour, monde”), each chosen from a probability distribution over the vocabulary.

In the standard diagram, you will see two towering stacks.

- The Left Tower (Encoder): Its job is Feature Extraction. It ingests the raw input and transforms it into a sequence of high-dimensional, context-aware vectors, one per input token.

- The Right Tower (Decoder): Its job is Generative Reconstruction. It takes the Encoder’s understanding and autoregressively generates the output, one token at a time.

2. The Core Architecture: Encoder-Decoder Topology

💡 Architecture Insight

The Encoder builds a context-aware vector for every input token through 6 stages of amplification (Self-Attention + FFN), while utilizing Positional Encodings to inject order into the parallel system.

Now, let’s zoom in. We are going to trace the signal path through the Encoder Block. This is where the machine “reads” and “understands.”

2.1. The Encoder Block (The Feature Extraction Circuit)

In the original paper, this block is stacked $N=6$ times. Think of this as a 6-stage amplifier, where each stage refines the signal clarity.

2.1.1. The Input Stage: Signal Conditioning

Before data enters the neural network, it must be conditioned—just like converting analog sound to digital signals.

- Tokenization: The raw text is chopped into standardized pieces (tokens), and each token is mapped to an integer ID from a fixed vocabulary.

- Input Embeddings: Each token ID is converted into a dense vector of size $d_{model}$ (512 in the base model). This is the “voltage” level of our system.

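- Positional Encodings (The System Clock): This is critical. Since the Transformer processes everything in parallel, it has no inherent concept of “order.” It doesn’t know that “Man bites dog” is different from “Dog bites man.” To fix this, a positional encoding vector (built from sine and cosine waves of different frequencies in the original paper) is added element-wise to each token embedding, stamping every token with its position.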
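The original paper’s fix is to add a fixed vector built from sine and cosine waves, $PE_{(pos,2i)} = \sin(pos/10000^{2i/d_{model}})$ and $PE_{(pos,2i+1)} = \cos(pos/10000^{2i/d_{model}})$, to each token embedding. Below is a minimal pure-Python sketch of that scheme, assuming a tiny hypothetical vocabulary with random (untrained) embeddings and $d_{model} = 8$ so the numbers stay readable; `positional_encoding` and `embed_with_positions` are illustrative names, not a library API.

```python
import math
import random


def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal encodings: one d_model-sized row per position."""
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # dimensions 2i and 2i+1 share one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def embed_with_positions(token_ids: list[int], embed_table: list[list[float]]) -> list[list[float]]:
    """Look up each token's embedding, then add its positional encoding element-wise."""
    d_model = len(embed_table[0])
    pe = positional_encoding(len(token_ids), d_model)
    return [
        [e + p for e, p in zip(embed_table[tok], pe[pos])]
        for pos, tok in enumerate(token_ids)
    ]


# Hypothetical toy setup: a 10-token vocabulary with random (untrained) embeddings, d_model = 8.
random.seed(0)
embed_table = [[random.gauss(0.0, 1.0) for _ in range(8)] for _ in range(10)]

man_bites_dog = embed_with_positions([3, 5, 7], embed_table)  # pretend 3="man", 5="bites", 7="dog"
dog_bites_man = embed_with_positions([7, 5, 3], embed_table)

# Token 7 ("dog") now produces a different input vector at position 2 than at position 0.
print(man_bites_dog[2] != dog_bites_man[0])  # True
```

Without the positional term, both orderings would hand the network the same set of vectors, just shuffled.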

2.1.2. Multi-Head Self-Attention (The Logic Core)

This is the heart of the system. We will break this down in detail in Section 4, but for the diagram flow, understand this:

- The signal is projected by three learned weight matrices into three streams: Query (Q), Key (K), and Value (V).

- It functions like a content-addressable memory lookup. The model asks, “For this word I’m processing (Query), which other words in the sentence (Keys) are relevant?”

- “Multi-Head” means we run this process 8 times in parallel (in the base model). It’s like having 8 independent processors analyzing the sentence from different perspectives (for example, syntax or word-to-word references). Each head learns its own focus during training; no role is assigned by hand.

2.1.3. Position-wise Feed-Forward Networks (FFN)

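After the attention mechanism mixes information across positions, each token’s vector is processed on its own: the same FFN (with the same weights) is applied to every position independently, hence “position-wise.”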

- The FFN is a simple Multi-Layer Perceptron (MLP).

- The Circuit: Linear Layer $\rightarrow$ Non-linear Activation (ReLU in the original paper; GELU in BERT and GPT) $\rightarrow$ Linear Layer.

- It expands the dimension (usually to $4 \times d_{model} = 2048$) and then compresses it back. This allows the model to “think” about the associations it found in the attention layer.
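A minimal sketch of the position-wise FFN in plain Python, assuming toy sizes ($d_{model} = 8$, $d_{ff} = 32$), ReLU as the activation, and random weights standing in for trained ones. The point to notice is that the same `w1`/`w2` are applied to every token independently; real implementations do this as one batched matrix multiply.

```python
import random


def relu(x: float) -> float:
    return max(0.0, x)


def linear(x, weight, bias):
    """y = x @ weight + bias for a single vector x (weight has shape in_dim x out_dim)."""
    return [
        sum(x[i] * weight[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]


def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: Linear -> ReLU -> Linear, applied to one token vector."""
    hidden = [relu(h) for h in linear(x, w1, b1)]  # expand: d_model -> d_ff
    return linear(hidden, w2, b2)                  # compress: d_ff -> d_model


def random_matrix(rows, cols):
    return [[random.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


# Toy sizes (assumed): d_model = 8, d_ff = 4 * d_model = 32.
random.seed(0)
d_model, d_ff = 8, 32
w1, b1 = random_matrix(d_model, d_ff), [0.0] * d_ff
w2, b2 = random_matrix(d_ff, d_model), [0.0] * d_model

sequence = [[random.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(5)]
# The same weights are applied to each position independently ("position-wise").
outputs = [feed_forward(tok, w1, b1, w2, b2) for tok in sequence]
print(len(outputs), len(outputs[0]))  # 5 8
```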

2.1.4. Signal Stabilization: Add & Norm

You will see lines looping around the sub-layers, labeled “Add & Norm.”

- Residual Connection (Add): This is a bypass wire. It takes the input signal and adds it directly to the output of the sub-layer ($x + Sublayer(x)$).

- Why? It mitigates the “vanishing gradient” problem: the identity path lets electricity (gradients) flow straight through the network during backpropagation, bypassing the resistance of each sub-layer.

- Layer Normalization (Norm): This stabilizes the voltage. For each token, it re-centers the features to mean 0 and variance 1, then applies a learned scale and shift, so the network learns stably and quickly.
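A minimal sketch of one “Add & Norm” step, assuming the Post-LN ordering of the original paper, $\text{LayerNorm}(x + \text{Sublayer}(x))$ (many newer models normalize before the sub-layer instead). `toy_sublayer` is a hypothetical stand-in for attention or the FFN, and the learned scale and shift are left at their initial values of 1 and 0.

```python
import math


def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize one token vector across its features, then apply scale and shift."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    normed = [(v - mean) / math.sqrt(var + eps) for v in x]
    if gamma is None:
        gamma = [1.0] * n  # learned scale; identity here
    if beta is None:
        beta = [0.0] * n   # learned shift; zero here
    return [g * v + b for g, v, b in zip(gamma, normed, beta)]


def add_and_norm(x, sublayer):
    """Post-LN ordering from the original paper: LayerNorm(x + Sublayer(x))."""
    residual = [a + b for a, b in zip(x, sublayer(x))]  # the "bypass wire"
    return layer_norm(residual)


def toy_sublayer(v):
    """Hypothetical stand-in for attention or the FFN: any d_model -> d_model map."""
    return [2.0 * t for t in v]


out = add_and_norm([1.0, 2.0, 3.0, 4.0], toy_sublayer)
print(out)  # features now have mean ~0 and variance ~1
```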

3. The Decoder Block Diagram: What Changes?

💡 Generative Modifications

The Decoder introduces “Masking” to enforce autoregressive properties (hiding future tokens) and “Encoder-Decoder Attention” to bridge the generated output with the source input.

The Right Tower (Decoder) looks similar but has two critical modifications required for generation.

3.1. Masked Multi-Head Attention (The “No Peeking” Circuit)

In the Decoder, we are training the model to predict the next word, and during training the whole target sentence is fed in at once. If every position could see the whole sentence (like the Encoder does), it would be cheating.

The Mask: Before the softmax, we add $-\infty$ to every attention score above the diagonal (a triangular mask covering future positions). This effectively cuts the connections to future words: after the softmax, their weights are exactly 0. When the model tries to predict word #4, it can only “see” words #1, #2, and #3.
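The mask can be sketched in a few lines of plain Python. This assumes uniform toy scores so the effect of the mask is easy to read; `causal_mask` is an illustrative helper, not a specific library’s function.

```python
import math


def causal_mask(n: int) -> list[list[float]]:
    """0.0 where position i may attend to position j (j <= i), -inf for future positions."""
    return [[0.0 if j <= i else -math.inf for j in range(n)] for i in range(n)]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]  # exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


# Toy attention scores for 4 positions (all equal, for readability).
scores = [[1.0] * 4 for _ in range(4)]
mask = causal_mask(4)
weights = [softmax([s + m for s, m in zip(srow, mrow)]) for srow, mrow in zip(scores, mask)]

for row in weights:
    print([round(w, 2) for w in row])
# [1.0, 0.0, 0.0, 0.0]
# [0.5, 0.5, 0.0, 0.0]
# [0.33, 0.33, 0.33, 0.0]
# [0.25, 0.25, 0.25, 0.25]
```

Each row still sums to 1; the weight that would have gone to future tokens is redistributed over the visible ones.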

3.2. Encoder-Decoder Attention (The Bridge)

This is where the two towers connect.

- The Queries (Q) come from the Decoder (what the model wants to say next).

- The Keys (K) and Values (V) come from the Encoder (the context of the input source).

Analogy: This is the moment a translator (Decoder) looks back at the original document (Encoder) to find the right word to write down.
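A minimal sketch of the bridge, assuming toy random vectors and skipping the learned $W^Q$, $W^K$, $W^V$ projections a real model applies (the encoder output is used directly as both keys and values). The shapes show the key property: one row of weights per target position, with one weight per source token.

```python
import math
import random


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(dec_queries, enc_keys, enc_values):
    """Encoder-Decoder attention: queries from the decoder, keys/values from the encoder."""
    d_k = len(enc_keys[0])
    outputs, weights = [], []
    for q in dec_queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in enc_keys]
        w = softmax(scores)  # one weight per SOURCE token
        weights.append(w)
        outputs.append([sum(wi * v[j] for wi, v in zip(w, enc_values))
                        for j in range(len(enc_values[0]))])
    return outputs, weights


# Hypothetical toy data: 3 target positions so far, 5 source tokens, d_k = d_v = 4.
random.seed(1)
encoder_out = [[random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(5)]
decoder_states = [[random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(3)]

out, w = cross_attention(decoder_states, encoder_out, encoder_out)
print(len(w), len(w[0]))      # 3 5  (target length x source length)
print(len(out), len(out[0]))  # 3 4  (one context vector per target position)
```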

4. Anatomy of the Attention Mechanism (Visualizing Q, K, V)

💡 The Logic Gate

Attention is a retrieval system calculating the dot-product similarity between a Query and Keys to retrieve weighted Values, scaled to prevent vanishing gradients.

If the Encoder/Decoder blocks are the chassis, the Attention Mechanism is the microprocessor. This is the specific sub-circuit where the “magic” of contextual understanding happens. As engineers, we need to look past the abstract concept of “attention” and look at the linear algebra—the logic gates of this system.

4.1. The Metaphor: The Retrieval System

Think of Attention as a database lookup.

- Query (Q): What you are searching for.

- Key (K): The label or metadata of the items in the database.

- Value (V): The actual content you want to retrieve.

In the Transformer, every input token produces its own Q, K, and V vectors. The model calculates the similarity between the Query of the current token and the Keys of every token in the sequence, including itself.
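To make the metaphor concrete, here is a sketch contrasting a hard dictionary lookup with an attention-style soft lookup. The 2-D key vectors and the `sharpness` multiplier are hypothetical, chosen for illustration; in the real model the scores are dot products of learned vectors scaled by $1/\sqrt{d_k}$.

```python
import math

# Hard lookup: a Python dict returns exactly one value, and only for an exact key match.
database = {"cat": 10.0, "dog": 20.0, "car": 30.0}
print(database["dog"])  # 20.0


def soft_lookup(query, keys, values, sharpness=1.0):
    """Attention-style lookup: score every key, then blend ALL values by softmax weight."""
    scores = [sharpness * sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return sum(w * v for w, v in zip(weights, values)), weights


# Hypothetical 2-D key vectors for "cat", "dog", "car"; the query points toward "dog".
keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
values = [10.0, 20.0, 30.0]
query = [0.0, 1.0]

blended, w = soft_lookup(query, keys, values)            # a mix of all three values
nearly_hard, _ = soft_lookup(query, keys, values, 50.0)  # approaches database["dog"]
print(round(blended, 2), round(nearly_hard, 2))
```

The soft version is differentiable, which is what lets the model learn which keys to match; as one score dominates, it converges to the hard lookup.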

4.2. The Circuit Logic: Scaled Dot-Product Attention

The diagram for this sub-module follows a precise mathematical signal path:

- MatMul ($QK^T$): We multiply the Query matrix by the transposed Key matrix, which computes a dot product for every Query-Key pair. In linear algebra, a high dot product indicates high similarity (the vectors point in roughly the same direction, assuming comparable lengths). This tells us how much focus to put on each word.

- Scale ($\frac{1}{\sqrt{d_k}}$): We divide the result by the square root of the dimension of the key vectors (in the base model, $d_k = 64$, so $\sqrt{64} = 8$).

Engineering Note: Why scale? If the components of $q$ and $k$ are independent with mean 0 and variance 1, their dot product $q \cdot k$ has variance $d_k$, so raw scores grow with the head dimension. Large values push the Softmax function into regions where gradients are extremely small (vanishing gradients), killing the learning process. Dividing by $\sqrt{d_k}$ brings the variance back to 1. This is essentially “gain control.”

- Mask (Optional): As mentioned in the Decoder section, we may inject $-\infty$ here to block illegal connections.

- Softmax: This converts the raw scores (logits) into probabilities, row by row: for each Query, the weights over all Keys add up to 1. These are the Attention Weights.

- MatMul ($\times V$): Finally, we multiply these probabilities by the Value vectors. This effectively creates a weighted sum of the values. If the attention weight for a word is 0.9, we take 90% of its Value vector.
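The five steps above correspond to $\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$. A minimal plain-Python sketch, assuming small hand-picked matrices (a real implementation would use batched tensor operations, e.g. in PyTorch):

```python
import math


def matmul(a, b):
    """Plain-Python product of an (n x m) and an (m x p) list-of-lists matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k) + mask) V, with one row of weights per query."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                                 # 1. MatMul
    scores = [[s / math.sqrt(d_k) for s in row] for row in scores]  # 2. Scale
    if mask is not None:                                             # 3. Mask (optional)
        scores = [[s + m for s, m in zip(srow, mrow)] for srow, mrow in zip(scores, mask)]
    weights = [softmax(row) for row in scores]                       # 4. Softmax
    return matmul(weights, v), weights                               # 5. MatMul with V


# Toy example (assumed values): 3 tokens, d_k = d_v = 2, self-attention so Q = K.
Q = K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
causal = [[0.0 if j <= i else -math.inf for j in range(3)] for i in range(3)]

out, weights = scaled_dot_product_attention(Q, K, V)
masked_out, masked_weights = scaled_dot_product_attention(Q, K, V, mask=causal)
print(masked_out[0])  # [1.0, 0.0]: with the mask, token 1 can only attend to itself
```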

4.3. Multi-Head Logic: Parallel Processing

Why do we split this into “Heads”?

If we only have one attention mechanism, each position gets a single weighted average, so the model tends to capture one dominant type of relationship (e.g., the subject-verb relationship in “The cat sat”).

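By projecting the 512-dimension vector into 8 heads of 64 dimensions each, the model can simultaneously attend to different relationships. Illustrative examples (heads learn their roles during training; they are not assigned):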

- Head 1: Syntax (Subject-Verb)

- Head 2: Pronoun resolution (Who is “it”?)

- Head 3: Prepositions (Spatial relationship)

- …and so on.

It’s the equivalent of running 8 parallel threads on a CPU, each handling a distinct sub-task, then concatenating the results back together and mixing them with a final linear projection ($W^O$).
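A sketch of just the split-and-concatenate bookkeeping, assuming base-model sizes ($d_{model} = 512$, $h = 8$). It deliberately omits the learned per-head Q/K/V projections and the output projection $W^O$; many implementations compute one full-width projection and then slice it per head in this way.

```python
def split_heads(x: list[list[float]], num_heads: int) -> list[list[list[float]]]:
    """(S, d_model) -> (num_heads, S, d_k): head h owns feature columns h*d_k .. (h+1)*d_k - 1."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_k = d_model // num_heads
    return [[row[h * d_k:(h + 1) * d_k] for row in x] for h in range(num_heads)]


def merge_heads(heads: list[list[list[float]]]) -> list[list[float]]:
    """(num_heads, S, d_k) -> (S, d_model): concatenate the heads along the feature axis."""
    seq_len = len(heads[0])
    return [[value for head in heads for value in head[s]] for s in range(seq_len)]


# Toy input with base-model width: 4 tokens, d_model = 512, split into 8 heads.
x = [[float(s * 512 + j) for j in range(512)] for s in range(4)]
heads = split_heads(x, num_heads=8)
print(len(heads), len(heads[0]), len(heads[0][0]))  # 8 4 64
print(merge_heads(heads) == x)                       # True
```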

5. Tensor Shape & Matrix Dimension Reference

💡 Data Flow Schematics

Understanding the wire gauge (Tensor Shapes) is critical for implementation. The flow typically moves from [Batch, Seq, d_model] to [Batch, Heads, Seq, Seq] during attention calculation, and back.

For the engineers looking to implement this in PyTorch or TensorFlow, the diagram is useless without knowing the wire gauge, or in this case, the Tensor Shapes. Misaligned dimensions are one of the most common sources of runtime errors.

Let’s trace a batch of data through the standard “Base” model:

- Batch Size ($B$): e.g., 64 sequences.

- Sequence Length ($S$): e.g., 100 tokens.

- Model Dimension ($d_{model}$): 512.

1. Input Tensor: Shape $(B, S, d_{model}) \rightarrow [64, 100, 512]$.

2. Q, K, V Projections:

We project the input into Heads.

Assume $h=8$ heads. Dimension per head $d_k = 512 / 8 = 64$.

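Reshaped Tensor: $(B, S, h, d_k) \rightarrow [64, 100, 8, 64]$, then transposed to $(B, h, S, d_k) \rightarrow [64, 8, 100, 64]$ so that each head can be processed as an independent batch.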

3. Attention Scores ($QK^T$):

The result represents the relationship of every token to every other token.

Shape: $(B, h, S, S) \rightarrow [64, 8, 100, 100]$. Note: This creates an $S \times S$ matrix, which is why memory usage grows quadratically $O(S^2)$ with sequence length.

4. Attention Output:

After multiplying by $V$, each head outputs $(B, h, S, d_k)$, which is transposed back to $(B, S, h, d_k)$.

Concatenate heads and apply the output projection $W^O$: back to $(B, S, d_{model}) \rightarrow [64, 100, 512]$.

5. Feed Forward Network:

Expands to $4 \times$ size: $(B, S, 2048)$.

Compresses back: $(B, S, 512)$.
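The shape walk-through can be checked with a few lines of arithmetic. This sketch assumes the batched $(B, h, S, d_k)$ layout for the per-head tensors and 4-byte float32 values for the memory estimate; `trace_shapes` is an illustrative helper, not a framework function.

```python
def trace_shapes(B=64, S=100, d_model=512, h=8, d_ff_mult=4):
    """Return the tensor shape at each step of one encoder layer (base-model defaults)."""
    if d_model % h != 0:
        raise ValueError("d_model must be divisible by h")
    d_k = d_model // h
    return {
        "input": (B, S, d_model),
        "qkv_split": (B, S, h, d_k),
        "qkv_transposed": (B, h, S, d_k),
        "scores": (B, h, S, S),
        "attn_out_per_head": (B, h, S, d_k),
        "concat": (B, S, d_model),
        "ffn_hidden": (B, S, d_ff_mult * d_model),
        "ffn_out": (B, S, d_model),
    }


shapes = trace_shapes()
for name, shape in shapes.items():
    print(f"{name:18} {shape}")

# Memory for the attention-score tensor alone, assuming 4-byte float32 values.
b, heads, s, _ = shapes["scores"]
num_scores = b * heads * s * s
print(num_scores, num_scores * 4 / 1e6, "MB")  # 5120000 20.48 MB
```

Doubling the sequence length to 200 quadruples that score tensor, which is the $O(S^2)$ growth noted above.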

6. Variations of the Standard Diagram

💡 Architectural Decoupling

Modern models often use subsets of the original diagram: BERT uses Encoder-Only (Understanding), GPT uses Decoder-Only (Generation), while T5 keeps the full Encoder-Decoder structure.

Not every Transformer uses the full Encoder-Decoder schematic. Depending on the engineering requirements, we often decouple the towers.

6.1. Encoder-Only (The “BERT” Family)

Diagram: Discard the Right Tower (Decoder).

Use Case: Classification, Sentiment Analysis, Named Entity Recognition.

Logic: Since it can see the entire sentence at once (bidirectional), it is well suited to understanding context. In “She sat on the bank of the river,” it can tell that “bank” means a riverbank because it also sees “river,” which comes later in the sentence.

6.2. Decoder-Only (The “GPT” Family)

Diagram: Discard the Left Tower (Encoder). Use only the Right Tower, minus its Encoder-Decoder Attention sub-layer (there is no encoder output to attend to).

Use Case: Text Generation, Chatbots (ChatGPT).

Logic: It is autoregressive. It reads a prompt, predicts the next token, appends that token to the input, and repeats. It is a creative generator.
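The autoregressive loop can be sketched independently of the network itself. `toy_model` below is a hypothetical lookup table standing in for a trained decoder (a real one conditions on the entire prefix, not just the last token), and the loop uses greedy decoding, which is only one of several possible decoding strategies.

```python
# Hypothetical stand-in for a trained decoder: maps the last token to a
# probability distribution over the next token.
TOY_NEXT_TOKEN_PROBS = {
    "<bos>": {"the": 0.9, "cat": 0.1},
    "the": {"cat": 0.8, "mat": 0.2},
    "cat": {"sat": 0.7, "<eos>": 0.3},
    "sat": {"<eos>": 0.6, "the": 0.4},
}


def toy_model(prefix):
    return TOY_NEXT_TOKEN_PROBS.get(prefix[-1], {"<eos>": 1.0})


def greedy_generate(model, prompt, max_new_tokens=10):
    """Autoregressive loop: predict one token, append it, feed the longer sequence back in."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = model(tokens)                   # distribution over the next token
        next_token = max(probs, key=probs.get)  # greedy: pick the most likely token
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens


print(greedy_generate(toy_model, ["<bos>"]))  # ['<bos>', 'the', 'cat', 'sat']
```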

6.3. Encoder-Decoder (The “T5” / “BART” Family)

Diagram: The full schematic.

Use Case: Translation, Summarization.

Logic: You need to fully understand an input (Encoder) to rewrite it in a new form (Decoder).

7. Conclusion

The Transformer diagram is more than just a flowchart; it is the schematic for the most powerful cognitive engine humanity has ever built. By replacing sequential processing with parallel attention mechanisms, it eased the memory and long-range context bottleneck that held RNNs back.

For the electrical engineer or software architect, understanding this diagram is no longer optional—it is the prerequisite for working with modern AI and IoT. Whether you are fine-tuning a BERT model for fault detection or building a custom GPT for customer service, the flow of tensors remains the same.

Ready to start building?

Don’t just stare at the diagram; trace the signal. Open PyTorch, define your nn.MultiheadAttention, and watch the loss drop.